What is OpenStack?

OpenStack is an open-source cloud computing platform that allows users to create and manage virtual machines and other cloud resources. One of the key components of OpenStack is Cinder, which is the block storage service. Cinder allows users to create and manage block storage volumes for their cloud instances.

What is Ceph?

Ceph is a distributed storage system that provides scalable and reliable storage for cloud environments. Ceph can be used as a backend for Cinder, allowing users to store their block storage volumes on Ceph clusters.

Storage Solution for OpenStack

Together, Cinder and Ceph provide a powerful storage solution for OpenStack environments. Cinder provides the interface for creating and managing block storage volumes, while Ceph provides the underlying storage infrastructure. This allows users to easily manage their storage resources within OpenStack and scale their storage infrastructure as needed. Additionally, Trilio’s customized tool makes it easier to efficiently back up and protect data stored in Ceph-based volumes within OpenStack.

Customer Challenge

A customer came to us recently and explained they have two very important issues with their current data protection solution.

High resource consumption

The data protection tool, which ran in VMs inside OpenStack itself, consumed a lot of CPU and RAM. This was causing problems for the customer's guest VMs, which were also running in OpenStack.

High amount of storage consumed

Cinder snapshots consumed a large amount of storage. These snapshots were using up all of the Ceph storage, which made protecting the storage used by the VMs cumbersome.

The Approach

We took a different approach that helps our customers solve both problems. First, I will explain how we use only the storage we need in the Ceph backend, so we don't disrupt your storage.

Below, you will see how we take a backup and what that looks like from the Ceph point of view. I hope it makes clear how efficient the tool is.

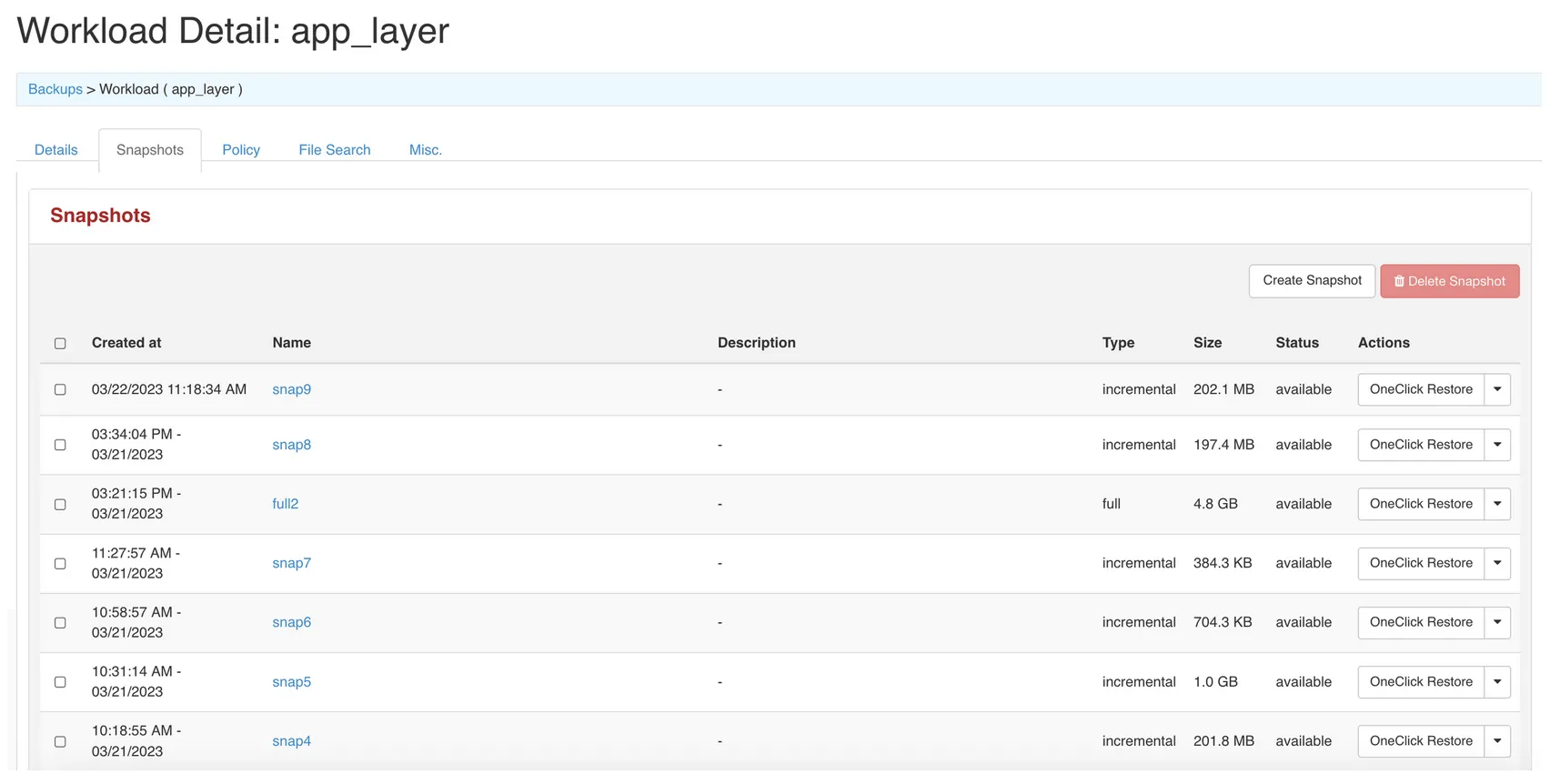

In my lab, I use Red Hat OpenStack 16.2 to demonstrate this. I have one workload, which backs up the VM app-server1, which has one 20 GB volume in Ceph. I have two full backups and several snapshots:

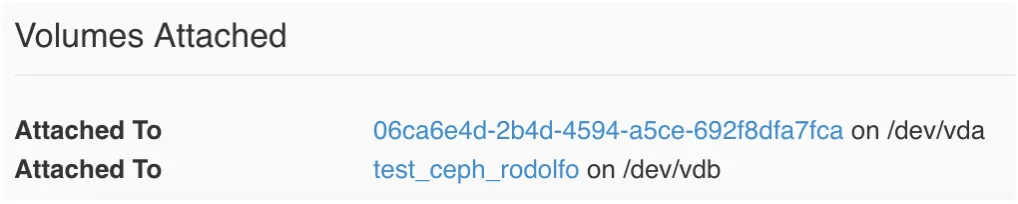

The instance has two volumes: the boot disk (1 GB) and the Ceph volume test_rodolfo_ceph (20 GB).

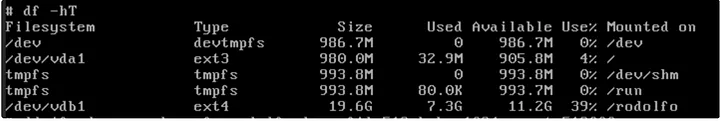

The 20 GB volume is mounted on /rodolfo.

I am going to add another 512 MB file, called largefile512mb, to that volume.

Now we can see the size is 512MB bigger:

Next, you can watch the file being created: the used space of the volume volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 grows by roughly 512 MB. At this moment there is one snapshot, snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1, with ID 132, which tracks all changed blocks:

[root@Node1 ~]# while true; do rbd -p volumes du volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39; rbd snap ls volumes/volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39; sleep 10; done NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 20 MiB <TOTAL> 20 GiB 8.6 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 20 MiB <TOTAL> 20 GiB 8.6 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 20 MiB <TOTAL> 20 GiB 8.6 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 20 MiB <TOTAL> 20 GiB 8.6 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 112 MiB <TOTAL> 20 GiB 8.6 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB 
volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 112 MiB <TOTAL> 20 GiB 8.6 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 112 MiB <TOTAL> 20 GiB 8.6 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 216 MiB <TOTAL> 20 GiB 8.7 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 216 MiB <TOTAL> 20 GiB 8.7 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 216 MiB <TOTAL> 20 GiB 8.7 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 264 MiB <TOTAL> 20 GiB 8.8 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB 
volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 300 MiB <TOTAL> 20 GiB 8.8 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 300 MiB <TOTAL> 20 GiB 8.8 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 300 MiB <TOTAL> 20 GiB 8.8 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 384 MiB <TOTAL> 20 GiB 8.9 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 384 MiB <TOTAL> 20 GiB 8.9 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 384 MiB <TOTAL> 20 GiB 8.9 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB 
volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 484 MiB <TOTAL> 20 GiB 9.0 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 484 MiB <TOTAL> 20 GiB 9.0 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 484 MiB <TOTAL> 20 GiB 9.0 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 528 MiB <TOTAL> 20 GiB 9.0 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023 NAME PROVISIONED USED volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 528 MiB <TOTAL> 20 GiB 9.0 GiB SNAPID NAME SIZE PROTECTED TIMESTAMP 132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
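
As a quick check on the samples above, the growth of the live volume can be computed from the USED column. The following is a minimal sketch, assuming sizes are printed with binary units exactly as they appear in the transcript; the helper name `to_bytes` is made up for this illustration and is not part of any Ceph tooling.

```python
import re

# Binary unit multipliers, matching the units shown in the USED column above.
UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}


def to_bytes(text: str) -> int:
    """Convert a size such as '528 MiB' or '8.5 GiB' to a byte count."""
    match = re.fullmatch(r"\s*([\d.]+)\s*(B|KiB|MiB|GiB|TiB)\s*", text)
    if match is None:
        raise ValueError(f"unrecognized size: {text!r}")
    return int(float(match.group(1)) * UNITS[match.group(2)])


# USED values of the live volume, copied from the first and last samples.
used_before = to_bytes("20 MiB")
used_after = to_bytes("528 MiB")

growth_mib = (used_after - used_before) / UNITS["MiB"]
print(f"volume grew by {growth_mib:.0f} MiB")
```

The result, 508 MiB, is close to the size of the 512 MB file. The reported figures are rounded and some of the blocks may already have been allocated, so an exact match is not expected.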

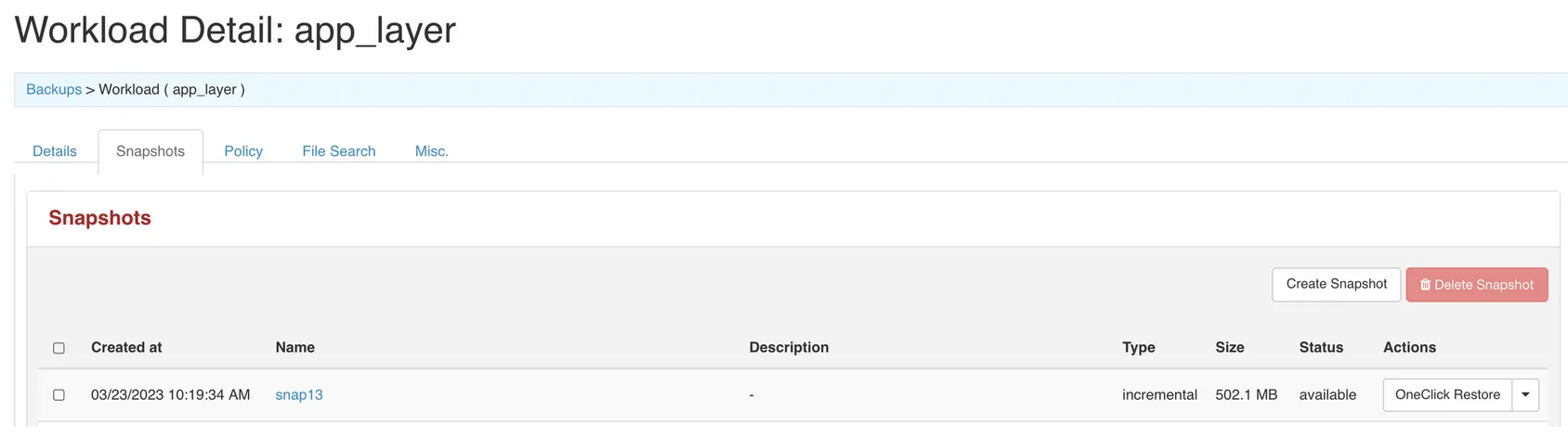

Then, when that process is completed, I launch an incremental snapshot. You can see a second snapshot being created; I explain what happened below:

    [root@Node1 ~]# while true; do rbd -p volumes du volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39; rbd snap ls volumes/volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39; sleep 10; done
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 528 MiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 0 B
    <TOTAL> 20 GiB 9.0 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 528 MiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 0 B
    <TOTAL> 20 GiB 9.0 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 528 MiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 12 MiB
    <TOTAL> 20 GiB 9.1 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 528 MiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 12 MiB
    <TOTAL> 20 GiB 9.1 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 528 MiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 12 MiB
    <TOTAL> 20 GiB 9.1 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 528 MiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 12 MiB
    <TOTAL> 20 GiB 9.1 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 528 MiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 12 MiB
    <TOTAL> 20 GiB 9.1 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 528 MiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 12 MiB
    <TOTAL> 20 GiB 9.1 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 528 MiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 12 MiB
    <TOTAL> 20 GiB 9.1 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB 8.5 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 528 MiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 12 MiB
    <TOTAL> 20 GiB 9.1 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    132 snapshot-d3112ba1-2214-48a8-8e54-727c98e7b1d1 20 GiB yes Thu Mar 23 15:33:28 2023
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023
    NAME PROVISIONED USED
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39@snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB 9.0 GiB
    volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39 20 GiB 12 MiB
    <TOTAL> 20 GiB 9.0 GiB
    SNAPID NAME SIZE PROTECTED TIMESTAMP
    136 snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d 20 GiB yes Thu Mar 23 15:49:37 2023

When the incremental backup is launched, a second snapshot, snapshot-13954e5d-8cb0-45f6-a731-9b7e9007291d, is created with ID 136. The previous snapshot (ID 132) is compared with the new one (ID 136). We get the difference from the Ceph API, and those changed blocks are what we move to the backup target. After all changed blocks are backed up, we remove the old snapshot and keep the new one (0 bytes at creation time), so all storage used by the previous snapshot is freed up.

Last, but not least, we can see the size of the incremental backup is exactly 512 MB, the size of the file we added previously.
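
To illustrate how a snapshot difference translates into data to move, here is a minimal sketch that totals the changed bytes in a list of extents. The sample is hypothetical and only assumes the shape that `rbd diff --format json` is generally understood to emit (objects with `offset`, `length`, and an `exists` flag); it is not output from the lab above, and it is not Trilio's code.

```python
import json

# Hypothetical sample in the assumed shape of `rbd diff --format json` output.
# "exists": "false" marks an extent that was discarded rather than written.
SAMPLE_DIFF = """[
  {"offset": 0,        "length": 4194304, "exists": "true"},
  {"offset": 8388608,  "length": 4194304, "exists": "true"},
  {"offset": 16777216, "length": 4194304, "exists": "false"}
]"""


def changed_bytes(diff_json: str) -> int:
    """Sum the lengths of extents that hold data needing to be backed up."""
    extents = json.loads(diff_json)
    return sum(
        extent["length"]
        for extent in extents
        # Accept both the string "true" and a JSON boolean true.
        if str(extent["exists"]).lower() == "true"
    )


total = changed_bytes(SAMPLE_DIFF)
print(f"{total} bytes ({total // 1024**2} MiB) to move to the backup target")
```

Only the two extents that still exist are counted, so the sample reports 8 MiB rather than the 12 MiB covered by all three entries.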

Explanation

Detailed explanation:

Whether we do a full or an incremental backup, we pass the Ceph snapshot ID to the qemu-img command. The qemu-img command reads directly from the snapshot, without needing to mount it.

We modified the qemu-img command to take the list of blocks to read from the snapshot when creating the QCOW2 image. We get that list of blocks from the rbd diff command.

So at any given point, we never mount Ceph snapshots on the compute hosts or in containers.
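
A reader consuming a block list like the one described above usually benefits from merging adjacent extents into larger contiguous ranges first. The sketch below shows one way to do that. It is an illustration only: the article does not say whether Trilio's modified qemu-img does this, and the `coalesce` helper and the `(offset, length)` tuple layout are assumptions made for this example.

```python
from typing import List, Tuple

Extent = Tuple[int, int]  # (offset, length), both in bytes


def coalesce(extents: List[Extent]) -> List[Extent]:
    """Merge touching or overlapping extents into contiguous ranges."""
    merged: List[Extent] = []
    for offset, length in sorted(extents):
        if merged and offset <= merged[-1][0] + merged[-1][1]:
            # This extent starts inside or right at the end of the previous
            # range, so extend that range instead of starting a new one.
            prev_offset, prev_length = merged[-1]
            end = max(prev_offset + prev_length, offset + length)
            merged[-1] = (prev_offset, end - prev_offset)
        else:
            merged.append((offset, length))
    return merged


print(coalesce([(8, 4), (0, 4), (4, 4), (20, 4)]))
```

Here the three touching extents collapse into a single 12-byte range, while the extent at offset 20 stays separate.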

Step-by-step workflow:

Full Backup

- DataMover (the Trilio service container running on the OpenStack Compute host) communicates directly with Ceph and takes a snapshot (rbd snapshot).
- rbd diff snapshot1 returns a JSON file listing all the extents that were allocated (full backup) or changed (incremental backup). For a full backup, this yields the list of blocks that were allocated for the volume.
- qemu-img convert <list of blocks> <ceph-snapshot1> <backup qcow2>: the qemu-img command has built-in support for rbd volumes. It can take ceph.conf, the keyid, and the path of the rbd image or rbd image snapshot to perform the QCOW2 operations.
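
The full backup steps can be sketched as a list of command lines. This is an approximation built from stock tools only: it uses `rbd snap create`, `rbd diff --format json`, and the `rbd:pool/image@snap:id=...:conf=...` source URI that QEMU accepts. Stock `qemu-img convert` copies the whole image; the block-list option of Trilio's modified qemu-img is not shown in this article, so it is left out. The Ceph user `cinder`, the `ceph.conf` path, and the output path are assumptions for illustration, and the function only builds the commands without running them.

```python
from typing import List


def full_backup_commands(
    pool: str,
    image: str,
    snap: str,
    out_path: str,
    ceph_user: str = "cinder",                 # assumed Ceph client name
    ceph_conf: str = "/etc/ceph/ceph.conf",    # assumed config location
) -> List[List[str]]:
    """Build (but do not run) the commands for a full backup of one volume."""
    spec = f"{pool}/{image}@{snap}"
    # QEMU's rbd source syntax: rbd:pool/image@snapshot[:option=value...]
    rbd_uri = f"rbd:{spec}:id={ceph_user}:conf={ceph_conf}"
    return [
        ["rbd", "snap", "create", spec],            # 1. take the snapshot
        ["rbd", "diff", "--format", "json", spec],  # 2. list allocated extents
        ["qemu-img", "convert", "-f", "raw", "-O", "qcow2", rbd_uri, out_path],
    ]


commands = full_backup_commands(
    "volumes",
    "volume-388aeefa-2d79-4625-91d5-00ba9b5ffc39",
    "snapshot1",
    "/tmp/full-backup.qcow2",  # hypothetical backup target path
)
for argv in commands:
    print(" ".join(argv))
```

Keeping each command as an argument list rather than one string means it could later be passed to `subprocess.run` without shell quoting issues.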

Incremental Backup

- Take a Ceph snapshot, say snapshot2 (rbd snapshot).
- rbd diff snapshot2 --from-snap snapshot1, which yields the blocks that were changed since snapshot1.
- qemu-img convert <list of blocks> <ceph snapshot2> <backup qcow2>
- Delete snapshot1.
- snapshot2 becomes snapshot1.

Repeat the incremental backup logic with every incremental backup.
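
The snapshot rotation above can be modelled with a toy in-memory volume. This is a simplified simulation, not Ceph or Trilio code: the `ToyVolume` class and `backup` function are invented for this example, snapshots are full copies of a block map rather than copy-on-write, and discarded blocks are ignored.

```python
from typing import Dict, List, Optional


class ToyVolume:
    """In-memory stand-in for an RBD image: block index -> block content."""

    def __init__(self) -> None:
        self.blocks: Dict[int, bytes] = {}
        self.snapshots: Dict[str, Dict[int, bytes]] = {}

    def write(self, index: int, data: bytes) -> None:
        self.blocks[index] = data

    def snapshot(self, name: str) -> None:
        self.snapshots[name] = dict(self.blocks)

    def diff(self, snap: str, from_snap: Optional[str] = None) -> List[int]:
        """Blocks in `snap` that are new or changed relative to `from_snap`."""
        new = self.snapshots[snap]
        old = self.snapshots[from_snap] if from_snap else {}
        return sorted(i for i, data in new.items() if old.get(i) != data)

    def delete_snapshot(self, name: str) -> None:
        del self.snapshots[name]


def backup(
    volume: ToyVolume, new_snap: str, previous_snap: Optional[str] = None
) -> Dict[int, bytes]:
    """Full backup when previous_snap is None, incremental otherwise."""
    volume.snapshot(new_snap)                       # take the new snapshot
    changed = volume.diff(new_snap, previous_snap)  # find blocks to copy
    payload = {i: volume.snapshots[new_snap][i] for i in changed}
    if previous_snap is not None:
        volume.delete_snapshot(previous_snap)       # free the old snapshot
    return payload


vol = ToyVolume()
vol.write(0, b"boot")
vol.write(1, b"data")
full = backup(vol, "snapshot1")
vol.write(2, b"largefile")
incremental = backup(vol, "snapshot2", previous_snap="snapshot1")
print(sorted(full), sorted(incremental), list(vol.snapshots))
```

After the incremental run only the new block is in the payload and a single snapshot remains, which mirrors the transcript where snapshot ID 132 disappears and ID 136 is kept.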

Types of Backup

Full backup: The most basic and comprehensive backup method, where all data is sent to another location.

Incremental backup: Backs up only the data that has changed since the last backup.

Conclusion

In summary, there are no temporary volumes when the backend storage is Ceph. A snapshot keeps track of the changed blocks. When an incremental backup is launched, we create a new snapshot and run an rbd diff between the two snapshots. This returns a JSON file listing all the extents that changed since the previous snapshot, which gives us the list of blocks to back up. At no point do we mount Ceph snapshots on the compute hosts or in containers.

Overall, Trilio’s approach to Ceph-based Cinder volume backups is optimized for storage, network, and resource performance. We consume less storage than other solutions and also use less network bandwidth to move the backups off-site, which yields a better use of compute resources.

Another concern this customer had was that the VMs used by their existing traditional heterogeneous data protection solution were taking a lot of resources from their OpenStack platform. Our approach to this issue was to optimize for OpenStack: Trilio for OpenStack was designed to run the DataMover service as containers on the physical Compute hosts, so it does not consume valuable virtual machine resources.

Hypervisors (that run your VMs) always run at a higher privilege and priority than the Trilio DataMover containers, thus ensuring we do not interfere with your virtual workloads.

FAQs

What is the role of Ceph in providing efficient storage solutions for cloud environments?

OpenStack integrates with Ceph to deliver scalable and reliable storage for cloud computing. Cinder, the block storage component of OpenStack, interfaces with Ceph, using it as a backend to provide and manage block storage volumes for virtual machines. This combination ensures users can efficiently scale and control their cloud storage infrastructure.

How does Trilio interact with Ceph to improve the storage consumption instead of using Cinder snapshots?

Trilio tackles high storage consumption through incremental backups, interacting with the Ceph API. When a new Ceph snapshot is created, a comparison between the previous and current snapshots identifies the changed data blocks. Only these blocks are backed up, reducing storage consumption. The old snapshot is then deleted, freeing up resources. This optimized backup strategy minimizes storage requirements and ensures efficient utilization.

My OpenStack environment isn't running Ceph for block storage. Can I still benefit from Trilio's optimization approach?

The core principles of storage backends are similar across many solutions, so Trilio can optimize most of them. The focus on efficient change tracking and minimizing unnecessary data movement translates to improved backup performance even with different storage technologies. Speak with an expert to ask whether your specific environment is supported by Trilio.

How does Trilio handle scaling in large OpenStack deployments with Ceph clusters?

Trilio’s architecture is designed to scale with your OpenStack environment. Additional DataMover containers can be deployed automatically on your compute nodes as needed, so that backup and recovery operations are distributed for optimal performance. Trilio also supports integration with existing OpenStack load balancing and resource management mechanisms.

Can Trilio back up other components of my OpenStack environment beyond just the Ceph-based Cinder volumes?

Yes, Trilio provides comprehensive backup and recovery capabilities for your entire OpenStack environment, covering instances (Nova), configurations (Metadata), networking (Neutron), etc. This provides a unified protection solution for your OpenStack deployment.