OpenShift, Red Hat’s enterprise-grade Kubernetes platform, has become a cornerstone for organizations embracing containerization. Its ability to streamline application development, deployment, and scaling across hybrid and multi-cloud environments is well established. However, a successful OpenShift deployment is far from a walk in the park. The intricacies of container orchestration, data management, and high availability can quickly overwhelm even experienced IT teams.

The stakes are high: a poorly executed OpenShift deployment can lead to downtime, data loss, and missed business opportunities. As organizations increasingly rely on OpenShift to power their critical applications, a strategic and resilient approach to deployment becomes essential. That approach starts with understanding the nuances of the platform and implementing best practices.

Why OpenShift Deployment is Mission-Critical (But Not Always Easy)

OpenShift isn’t just another platform—it’s a strategic enabler for businesses seeking agility and innovation. Through the power of containerization and Kubernetes, OpenShift empowers organizations to:

- Accelerate Application Delivery: OpenShift’s streamlined deployment pipelines and standardized environments significantly reduce the time it takes to get applications from development to production. This means faster time to market, improved responsiveness to customer needs, and a competitive edge.

- Optimize Resource Utilization: OpenShift’s orchestration of containers allocates resources dynamically, maximizing utilization and minimizing waste. This translates to cost savings and the ability to scale applications as demand changes.

- Enhance Portability and Flexibility: OpenShift’s consistent platform across on-premises, hybrid, and multi-cloud environments provides unparalleled flexibility. Applications can be seamlessly migrated, deployed, and managed across diverse infrastructures, reducing vendor lock-in and enabling organizations to adapt to evolving business requirements.

OpenShift Deployment Challenges

While the benefits of OpenShift are clear, the path to a successful deployment often presents challenges:

- Complexity: The sheer number of components involved in an OpenShift deployment—from pods and services to routes and persistent volumes—can be overwhelming. Configuring, managing, and troubleshooting these components require specialized expertise. Red Hat offers comprehensive documentation to help users understand these concepts and navigate the intricacies of the platform.

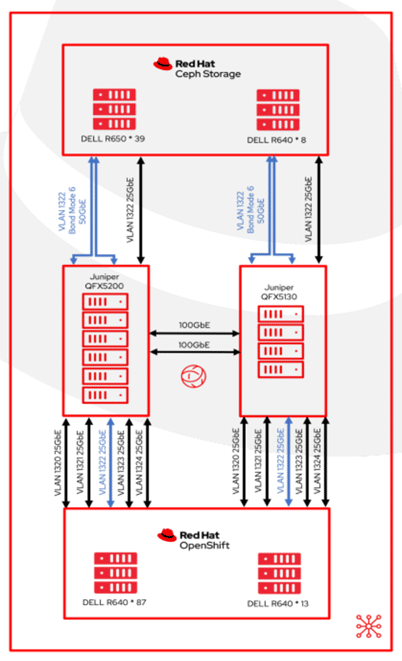

- Scalability: As applications grow and user demands increase, scaling OpenShift deployments can become a complex undertaking. Ensuring optimal performance and resource allocation while maintaining application availability requires careful planning and continuous monitoring. For instance, in one large-scale deployment scenario, a 100-node OpenShift cluster successfully managed 3,000 virtual machines (VMs) and 21,400 pods, demonstrating the platform’s capacity to handle substantial workloads. Reaching that scale, however, depends on tuning and optimization to prevent performance bottlenecks.

- Data Protection: OpenShift’s dynamic nature poses unique challenges for data protection. Traditional backup solutions may not be equipped to handle containers or the complexities of stateful applications.

Data protection starts with backups, but it is ultimately about ensuring the resilience and availability of the applications in your OpenShift environment. A robust data protection strategy is essential for minimizing downtime, mitigating data loss, and maintaining business continuity in the face of unexpected disruptions.
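The scale figures cited earlier describe a 100-node cluster running 3,000 VMs and 21,400 pods. As a rough sanity check on them, the sketch below works out the average density per node and compares it with a per-node pod limit. The limit of 250 is an assumption, taken as OpenShift’s default kubelet `maxPods` value, so check the setting on your own cluster. The `density_report` helper is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterLoad:
    nodes: int
    pods: int
    vms: int


def density_report(load: ClusterLoad, max_pods_per_node: int = 250) -> dict:
    """Average per-node density and headroom against a per-node pod limit.

    max_pods_per_node defaults to 250, assumed here to match the kubelet
    maxPods setting; adjust it to your cluster's configuration.
    """
    if load.nodes <= 0:
        raise ValueError("nodes must be positive")
    pods_per_node = load.pods / load.nodes
    vms_per_node = load.vms / load.nodes
    return {
        "pods_per_node": pods_per_node,
        "vms_per_node": vms_per_node,
        # Fraction of the per-node pod limit used on an average node.
        "pod_limit_utilization": pods_per_node / max_pods_per_node,
        # Only the average is checked; uneven scheduling can still fill a node.
        "average_within_limit": pods_per_node <= max_pods_per_node,
    }


report = density_report(ClusterLoad(nodes=100, pods=21_400, vms=3_000))
print(report)
```

On average that works out to 214 pods and 30 VMs per node, or about 86% of a 250-pod limit. Averages hide skew, though. An individual node can still reach its limit if pods are scheduled unevenly.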

A Strategic Approach to OpenShift Deployment

Given the complexities and risks associated with OpenShift deployment, a strategic approach is essential to ensure success. This involves a multi-faceted strategy encompassing planning, architecture, best practices, and a focus on data protection.

Planning and Architecture

Before diving into the technical details of OpenShift deployment, take a step back and consider the bigger picture. Align your OpenShift deployment with your overall business goals and IT strategy. Determine which applications are best suited for containerization, how they will integrate with existing systems, and what your scalability requirements are.

Next, carefully architect your OpenShift environment. This includes designing your cluster topology, selecting appropriate storage solutions, and configuring networking components. Red Hat’s documentation provides architectural guidance that you can adapt to your specific needs.
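Control plane size is one topology decision with a simple arithmetic basis. etcd, the key-value store behind the Kubernetes API, needs a majority (quorum) of its members to stay writable. This is why OpenShift’s standard highly available installation uses three control plane nodes. The sketch below computes quorum and failure tolerance for a given member count. It illustrates the majority rule only and is not an OpenShift sizing tool. The function names are hypothetical.

```python
def etcd_quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` voting members."""
    if members < 1:
        raise ValueError("need at least one member")
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority remains available."""
    return members - etcd_quorum(members)


for n in (1, 3, 4, 5):
    print(f"{n} members: quorum {etcd_quorum(n)}, tolerates {failures_tolerated(n)} failure(s)")
```

Four members tolerate no more failures than three, which is why odd member counts are the norm.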

OpenShift Deployment Best Practices

Once you have a solid plan and architecture in place, it’s time to implement OpenShift deployment best practices. Here are a few key tips:

- Automation: Embrace automation tools to streamline repetitive tasks, reduce human error, and ensure consistency across your OpenShift environments.

- Monitoring: Implement robust monitoring to gain continuous visibility into the health and performance of your OpenShift cluster and applications, so you can address problems before they cause outages.

- Security: Prioritize security from the outset. Follow OpenShift security guidelines, implement role-based access control (RBAC), and regularly scan for vulnerabilities.

- Upgrades and Updates: Keep your OpenShift environment up to date with the latest patches and security fixes, and regularly test your upgrade procedures to ensure a smooth transition. For example, in a large-scale deployment with 3,000 VMs and 21,400 pods, a minor upgrade took 35 minutes while a major upgrade took 136 minutes. This highlights the need for careful planning and testing to minimize disruption.
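To make the RBAC recommendation concrete, here is a minimal sketch that builds a namespaced, read-only `Role` and a matching `RoleBinding` using the standard `rbac.authorization.k8s.io/v1` API. It prints them as a JSON list, which `oc apply -f` accepts. The namespace `payments`, the role name `pod-reader`, and the group `payments-support` are hypothetical placeholders.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"


def read_only_role(name: str, namespace: str) -> dict:
    """Role that can view pods and their logs, but not change anything."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group, where pods live
                "resources": ["pods", "pods/log"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


def bind_role_to_group(role: dict, group: str) -> dict:
    """RoleBinding granting `role` to a group within the role's namespace."""
    meta = role["metadata"]
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{meta['name']}-{group}", "namespace": meta["namespace"]},
        "roleRef": {"apiGroup": RBAC_API, "kind": "Role", "name": meta["name"]},
        "subjects": [{"apiGroup": RBAC_API, "kind": "Group", "name": group}],
    }


# Hypothetical names, for illustration only.
role = read_only_role("pod-reader", "payments")
binding = bind_role_to_group(role, "payments-support")
# A v1 List lets both objects be applied from a single file.
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [role, binding]}, indent=2))
```

You could pipe the output straight into the cluster, for example `python rbac.py | oc apply -f -`. Granting only `get`, `list`, and `watch` keeps the role least-privilege. Write access would be a separate, deliberately granted role.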

Integrating Data Protection

One of the most critical aspects of any OpenShift deployment strategy is data protection. As your applications generate and consume valuable data, safeguarding that data becomes vital. This includes not only regular backups but also disaster recovery planning and the ability to quickly restore applications and data in the event of an outage.

While OpenShift offers some built-in data protection mechanisms, such as persistent volumes and snapshots, they may not be sufficient for all use cases. The storage layer matters too. For example, optimizing Ceph, a popular storage choice for OpenShift, involves careful tuning of placement groups (PGs) and Prometheus settings. On top of a well-tuned storage layer, consider integrating a comprehensive data protection solution that is specifically designed for OpenShift environments. Such a solution should provide:

- Application-Centric Backups: The ability to capture and restore entire applications, including their configurations, dependencies, and data, in a single operation.

- Granular Recovery: The flexibility to restore individual components or specific data sets rather than entire applications.

- Scalability: The capacity to scale data protection as your OpenShift environment grows.
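In OpenShift, volume snapshots are requested through the Kubernetes CSI `VolumeSnapshot` API (`snapshot.storage.k8s.io/v1`). The sketch below builds a snapshot request for a single PersistentVolumeClaim and a new claim that restores from it. The claim name `orders-db`, the namespace `shop`, and the class names `csi-snapclass` and `ceph-rbd` are hypothetical. Use the snapshot and storage classes your cluster actually provides.

```python
import json

SNAPSHOT_GROUP = "snapshot.storage.k8s.io"


def volume_snapshot(pvc: str, namespace: str, snapshot_class: str) -> dict:
    """Request a CSI snapshot of one PersistentVolumeClaim."""
    return {
        "apiVersion": f"{SNAPSHOT_GROUP}/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": f"{pvc}-snap", "namespace": namespace},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc},
        },
    }


def restore_claim(snapshot: dict, size: str, storage_class: str) -> dict:
    """New PVC whose initial contents come from the given snapshot."""
    meta = snapshot["metadata"]
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": f"{meta['name']}-restore", "namespace": meta["namespace"]},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            # Must be at least the snapshot's restore size.
            "resources": {"requests": {"storage": size}},
            "dataSource": {
                "apiGroup": SNAPSHOT_GROUP,
                "kind": "VolumeSnapshot",
                "name": meta["name"],
            },
        },
    }


# All names below are hypothetical placeholders.
snap = volume_snapshot("orders-db", "shop", "csi-snapclass")
restored = restore_claim(snap, "20Gi", "ceph-rbd")
print(json.dumps(snap, indent=2))
print(json.dumps(restored, indent=2))
```

A snapshot like this covers a single volume. It does not capture the Deployments, Secrets, or ConfigMaps that use that volume, and this gap is what the application-centric backups described above are meant to close.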

By integrating data protection into your OpenShift deployment strategy, you can ensure that your applications and data are resilient in the face of unexpected disruptions. Trilio is a comprehensive data protection platform designed specifically for Kubernetes environments, including OpenShift. With Trilio, you can easily back up and restore your entire application environment, ensuring business continuity and minimizing downtime. Schedule a demo today to see Trilio in action.

Data Resilience: The Unsung Hero of OpenShift Success

In OpenShift, where containers spin up and down while applications scale on demand, data resilience is a critical factor for success. But what exactly is data resilience, and why does it matter so much for OpenShift environments?

Data resilience goes beyond the traditional concept of backups. It encompasses a holistic approach to safeguarding your application data, ensuring its availability, integrity, and recoverability in the face of any disruption. This includes not only protection against hardware failures and natural disasters but also the ability to withstand cyberattacks, human errors, and even application misconfigurations.

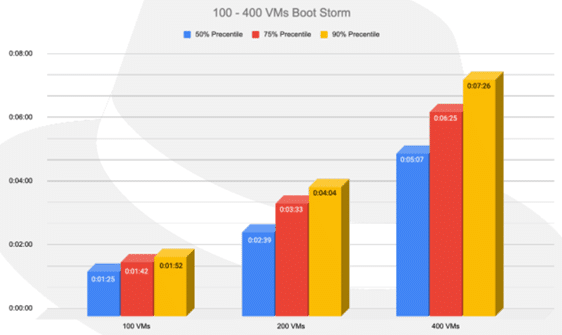

Data resilience is particularly crucial for OpenShift environments due to the ephemeral nature of containers. Unlike traditional virtual machines, containers are designed to be lightweight and disposable. If a container fails, data written inside it can be lost unless proper precautions are taken. The distributed nature of OpenShift clusters adds another layer of complexity to data protection. Scale tests of VM migration (involving 1,000 VMs) and boot storms (up to 1,000 VMs) illustrate the point. These scenarios are common in disaster recovery situations and show why robust data protection mechanisms are needed to minimize downtime and data loss.
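The usual precaution is to keep state outside the container’s writable layer by mounting a PersistentVolumeClaim, so the data outlives any individual container or pod. Here is a minimal sketch of that wiring. The claim name `app-data`, the mount path `/var/lib/app`, and the image reference are hypothetical.

```python
import json


def persistent_claim(name: str, size: str) -> dict:
    """PVC requesting storage that exists independently of any pod."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_with_claim(pod: str, image: str, claim: str, mount_path: str) -> dict:
    """Pod whose container writes to the claim instead of its own filesystem."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod},
        "spec": {
            "containers": [
                {
                    "name": pod,
                    "image": image,
                    # Files written under mount_path land on the persistent volume.
                    "volumeMounts": [{"name": "data", "mountPath": mount_path}],
                }
            ],
            # The volume name "data" links the mount above to the claim.
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim}}],
        },
    }


# Hypothetical names and image, for illustration only.
claim = persistent_claim("app-data", "5Gi")
pod = pod_with_claim("app", "registry.example.com/app:1.0", "app-data", "/var/lib/app")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [claim, pod]}, indent=2))
```

In practice, the pod would usually be managed by a Deployment or StatefulSet. The volume wiring is the same inside its pod template.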

Trilio: Empowering Data Resilience for OpenShift

Trilio, a leading provider of OpenShift data protection solutions, recognizes the unique challenges of OpenShift environments. Trilio offers a comprehensive platform for backup, recovery, migration, and disaster recovery that is specifically tailored for Kubernetes and OpenShift.

Here are some of the key features of Trilio:

- Application-Centric Protection: Trilio understands the relationships among Kubernetes objects, ensuring that applications are backed up and restored as a whole, including their configurations, dependencies, and data.

- Agentless Architecture: Trilio operates without requiring agents to be installed on every node, simplifying deployment and management.

- Granular Recovery: Trilio enables the granular recovery of individual Kubernetes objects, namespaces, or entire applications, providing flexibility and minimizing downtime.

- Scalability: Trilio is designed to scale effortlessly as your OpenShift environment grows, ensuring that your data protection capabilities keep pace with your business needs.

- Cloud-Native Integration: Trilio integrates seamlessly with popular cloud providers and storage solutions, providing a unified data protection platform for hybrid and multi-cloud environments.

Schedule a demo to learn how Trilio is helping organizations achieve data resilience and unlock the full potential of their OpenShift investments.

Conclusion: Future-Proof Your OpenShift Investments with Trilio

OpenShift is a powerful tool for businesses seeking to accelerate innovation and streamline application delivery. However, the complexities of OpenShift deployment, coupled with the critical importance of data resilience, mean that a strategic approach is needed. In this article, we explored the key challenges and considerations associated with OpenShift deployment. We emphasized the importance of planning, architecture, best practices, and most importantly, data protection.

Trilio offers a comprehensive suite of features designed to address the unique needs of OpenShift, including application-centric protection, agentless architecture, granular recovery, scalability, and seamless cloud-native integration.

Don’t leave the success of your OpenShift deployment to chance. Embrace a proactive approach to data resilience with Trilio. Schedule a demo to discover how Trilio can help you future-proof your OpenShift deployment.

FAQs

What are the key factors to consider when planning an OpenShift deployment?

Successful OpenShift deployment hinges on a well-defined strategy that aligns with your business goals. Before embarking on your OpenShift journey, assess your application portfolio to identify suitable candidates for containerization. Consider factors like application architecture, dependencies, and scalability requirements. Additionally, carefully architect your OpenShift environment, including cluster topology, storage solutions, and networking configurations. A thorough understanding of your infrastructure and application landscape will lay the groundwork for a seamless OpenShift deployment.

How can I ensure the security of my OpenShift deployment?

Embrace a defense-in-depth approach that includes securing the underlying infrastructure, implementing role-based access control (RBAC), and regularly scanning for vulnerabilities. Leverage OpenShift’s built-in security features, such as network policies to restrict traffic between pods and security context constraints (SCCs) to control the privileges pods can request. Additionally, consider integrating third-party security solutions to enhance threat detection and incident response capabilities.
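As an illustration of restricting traffic with network policies, the sketch below builds a standard `networking.k8s.io/v1` `NetworkPolicy`. It admits ingress to database pods only from API-tier pods in the same namespace, on one TCP port. Once a policy with an ingress section selects a pod, any ingress not matched by a rule is denied. The labels `app: db` and `app: api`, the namespace `shop`, and port 5432 are hypothetical.

```python
import json


def allow_from(namespace: str, target: dict, source: dict, port: int) -> dict:
    """NetworkPolicy: pods matching `target` accept TCP `port` only from `source` pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source['app']}-to-{target['app']}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target},
            # Declaring Ingress isolates the selected pods: unmatched ingress is dropped.
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # A podSelector without namespaceSelector matches pods in this namespace.
                    "from": [{"podSelector": {"matchLabels": source}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical labels, namespace and port.
policy = allow_from("shop", target={"app": "db"}, source={"app": "api"}, port=5432)
print(json.dumps(policy, indent=2))
```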

What role does automation play in OpenShift deployment and management?

Automation is a game-changer for OpenShift deployments. Automating repetitive tasks—such as provisioning infrastructure, deploying applications, and scaling resources—lets you significantly reduce the risk of human error and accelerate your time to market. Tools like Ansible and Terraform can be invaluable for managing complex OpenShift environments, ensuring consistency, and simplifying day-to-day operations.
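Much of that consistency comes from the declarative, idempotent model these tools share. You describe the desired state, the tool compares it with what exists, and it changes only the difference, so running it twice is harmless. The sketch below illustrates that comparison step with plain dictionaries. It is a conceptual model, not how Ansible or Terraform are implemented, and the resource names are hypothetical.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired vs. current resources (keyed by name) and list needed actions."""
    return {
        "create": sorted(desired.keys() - current.keys()),
        "update": sorted(
            name for name in desired.keys() & current.keys() if desired[name] != current[name]
        ),
        "delete": sorted(current.keys() - desired.keys()),
    }


def apply(desired: dict[str, dict], current: dict[str, dict]) -> dict[str, dict]:
    """Converge `current` to `desired`; the result always equals `desired`."""
    actions = plan(desired, current)
    state = {name: spec for name, spec in current.items() if name not in actions["delete"]}
    for name in actions["create"] + actions["update"]:
        state[name] = dict(desired[name])
    return state


desired = {"web": {"replicas": 3}, "worker": {"replicas": 2}}
current = {"web": {"replicas": 1}, "legacy-cron": {"replicas": 1}}

print(plan(desired, current))
state = apply(desired, current)
# A second run finds nothing to do: that is idempotence.
print(plan(desired, state))
```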

How can I achieve seamless scalability for my OpenShift deployment?

Scalability is a hallmark of OpenShift, but it requires careful planning and execution. Start by designing your applications with scalability in mind, using microservices architectures and horizontal scaling principles. Use OpenShift’s autoscaling features, such as the horizontal pod autoscaler and the cluster autoscaler, to adjust resources dynamically based on demand. Implement robust monitoring and alerting to proactively identify performance bottlenecks and trigger scaling actions.
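Pod-level autoscaling builds on the Kubernetes HorizontalPodAutoscaler. Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule, clamps the result to the min/max replica bounds, and builds a matching `autoscaling/v2` manifest. It omits the controller’s tolerance band and stabilization windows. The Deployment name `web` and the 70% CPU target are hypothetical.

```python
import json
import math


def desired_replicas(current: int, metric: float, target: float, lo: int, hi: int) -> int:
    """Core HPA rule, clamped to [lo, hi]; ignores tolerance and stabilization."""
    raw = math.ceil(current * metric / target)
    return max(lo, min(hi, raw))


def hpa(deployment: str, min_replicas: int, max_replicas: int, cpu_percent: int) -> dict:
    """autoscaling/v2 HPA targeting the average CPU utilization of a Deployment."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {"type": "Utilization", "averageUtilization": cpu_percent},
                    },
                }
            ],
        },
    }


# 4 pods averaging 105% CPU against a 70% target: ceil(4 * 105 / 70) = 6.
print(desired_replicas(current=4, metric=105, target=70, lo=2, hi=10))
print(json.dumps(hpa("web", 2, 10, 70), indent=2))
```

CPU utilization targets are measured relative to the pods’ CPU requests, so the Deployment’s containers need CPU requests set for this metric to work.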

Can I migrate existing applications to OpenShift?

Yes, OpenShift is designed to accommodate a wide range of applications, including those that may have been developed for traditional virtualized environments. However, migrating existing applications to OpenShift may require some refactoring or rearchitecting to align with containerization principles. Assess the complexity of your applications and prioritize those that will benefit most from containerization. OpenShift’s extensive documentation and community resources provide guidance on migration best practices and tools.